Bingyi Kang

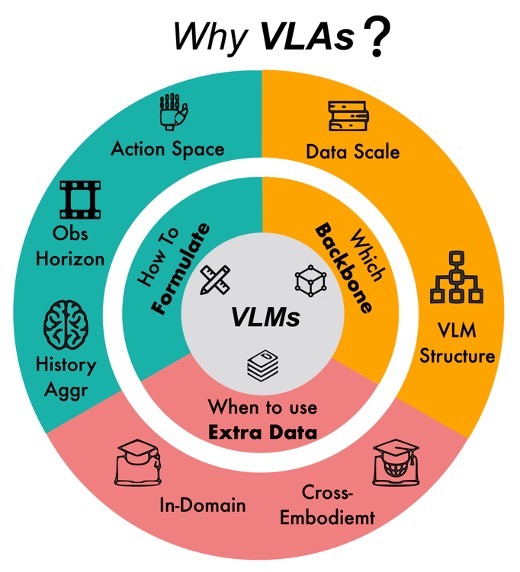

I am a research scientist at TikTok, Seattle. My primary research interests are computer vision, multi-modal models, and decision making. My goal is to develop agents that can acquire knowledge from various observations and interact with the physical world. I approach this goal from the following perspectives:

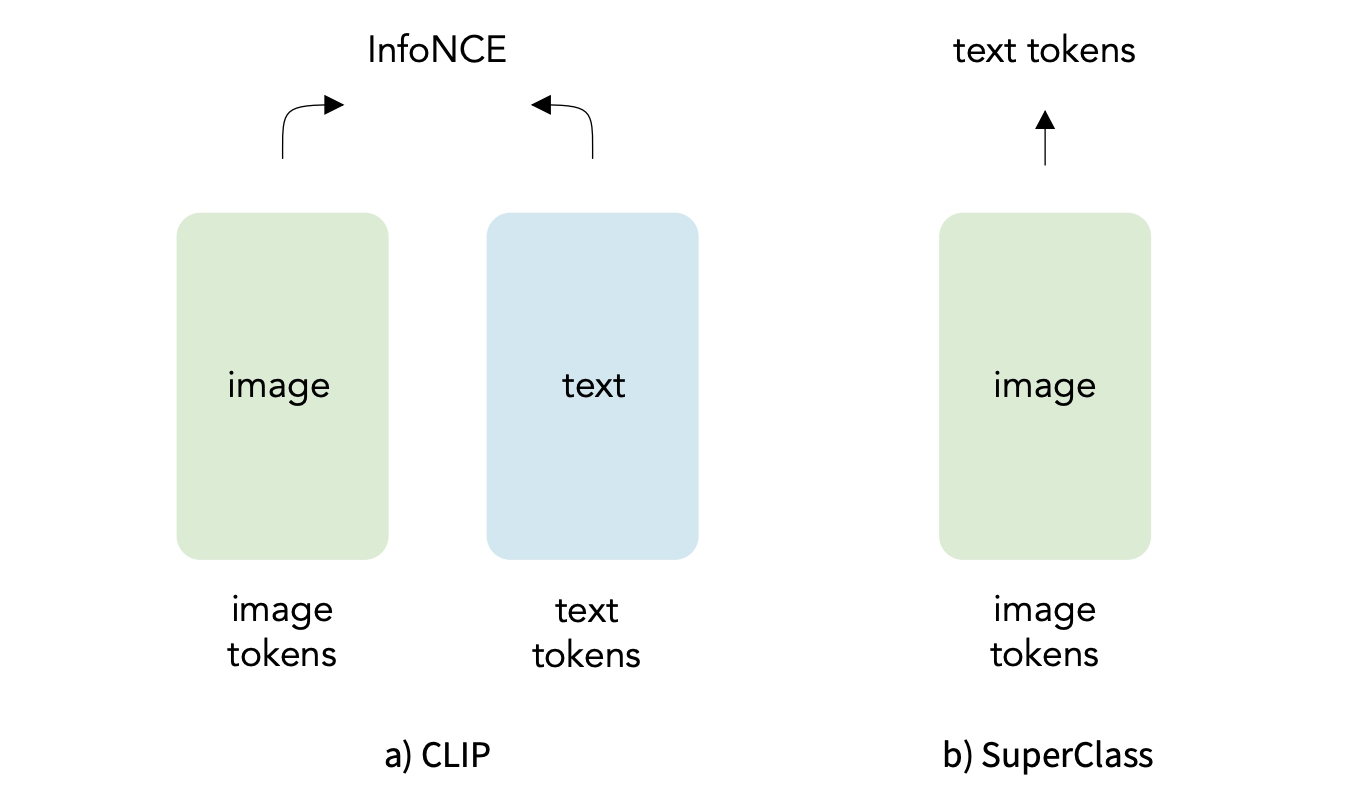

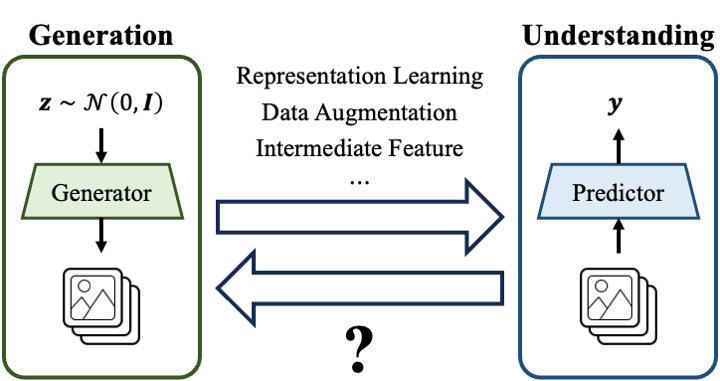

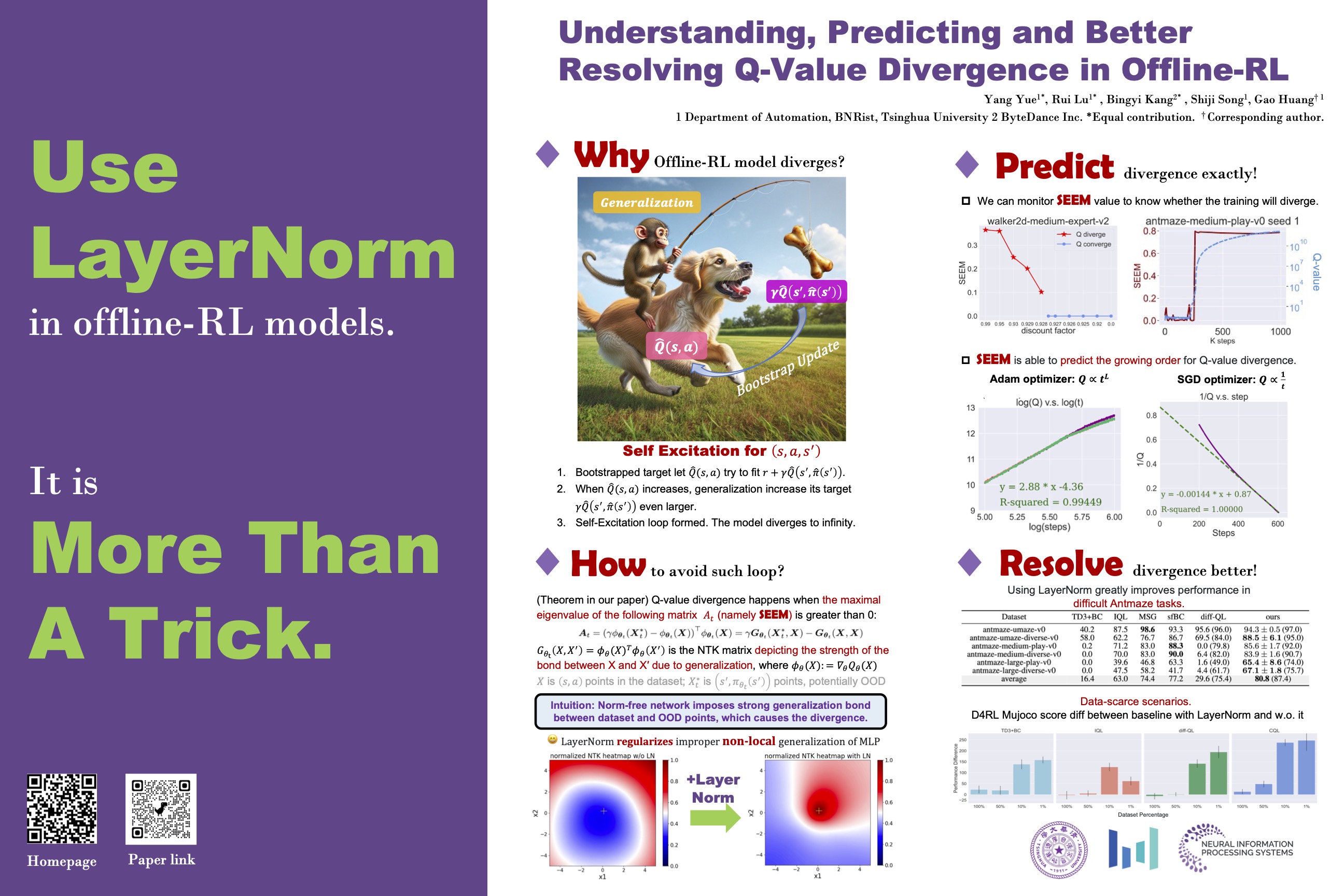

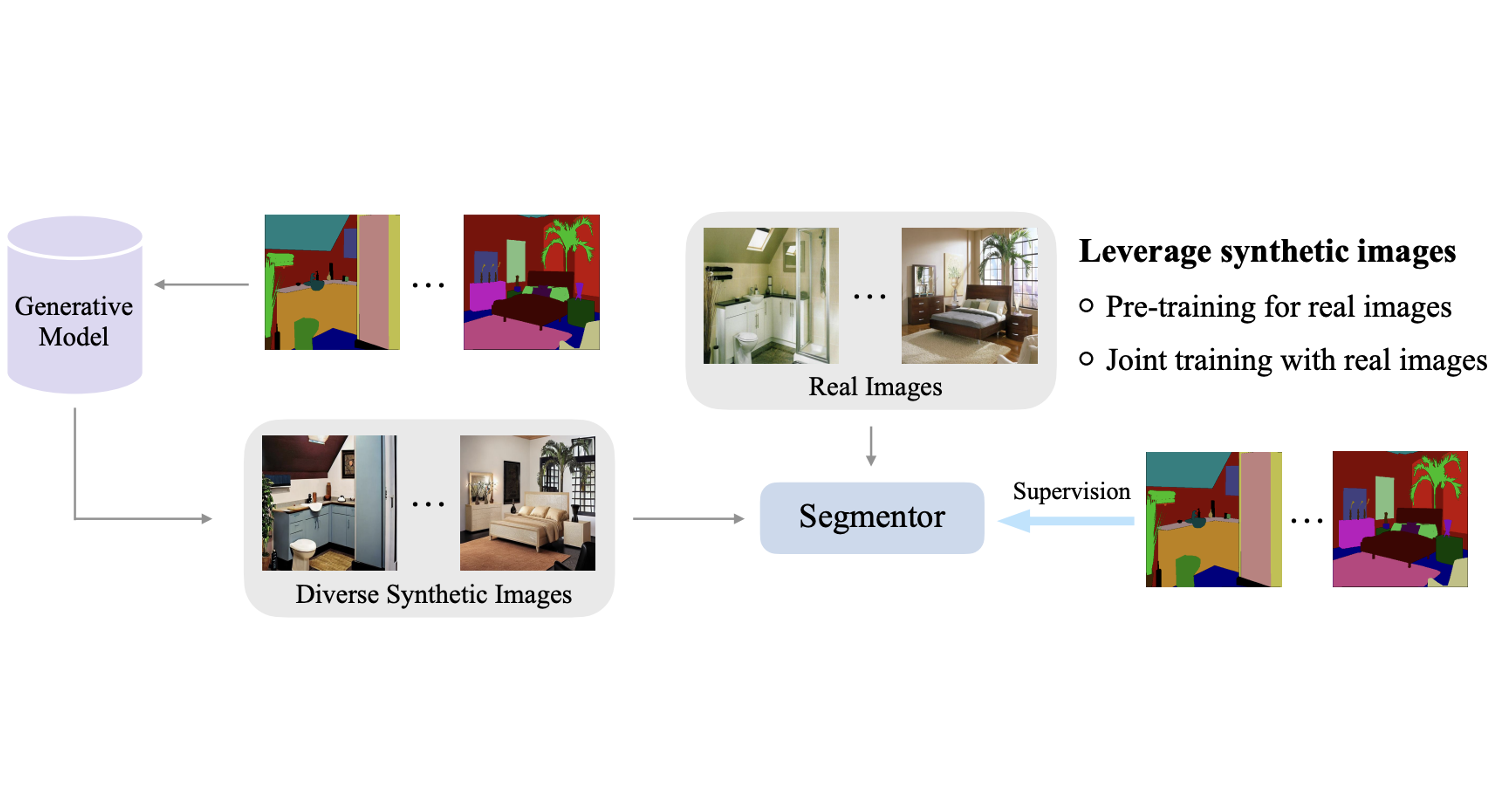

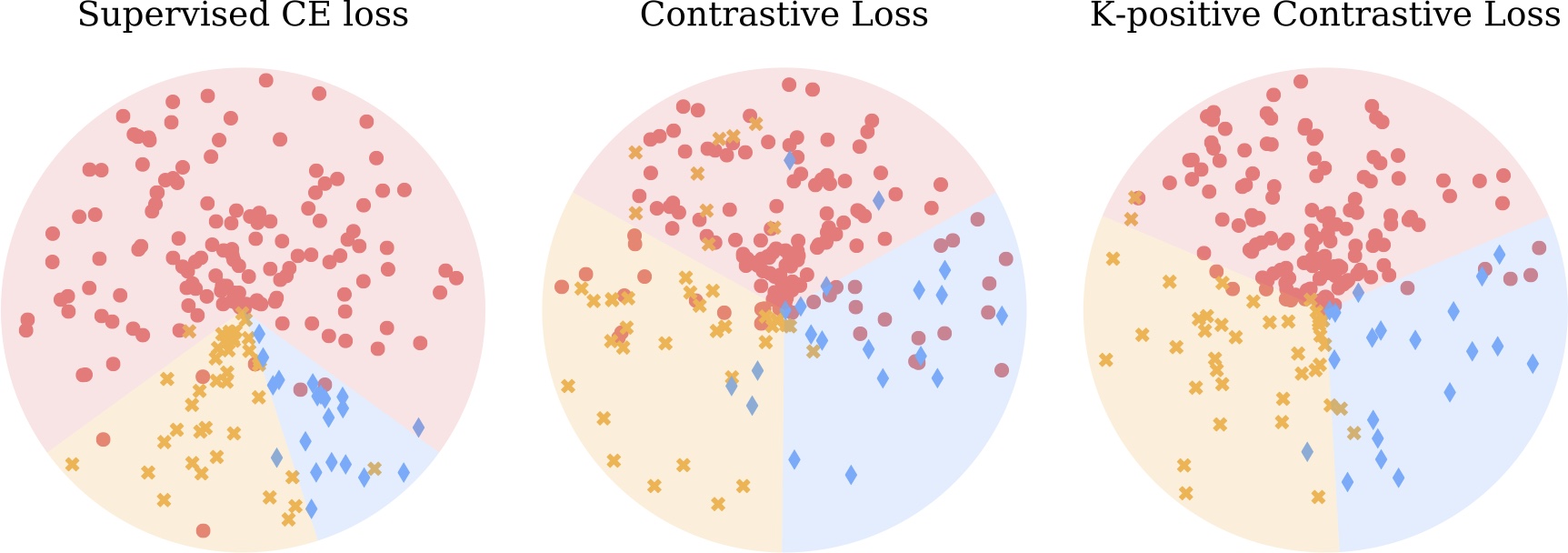

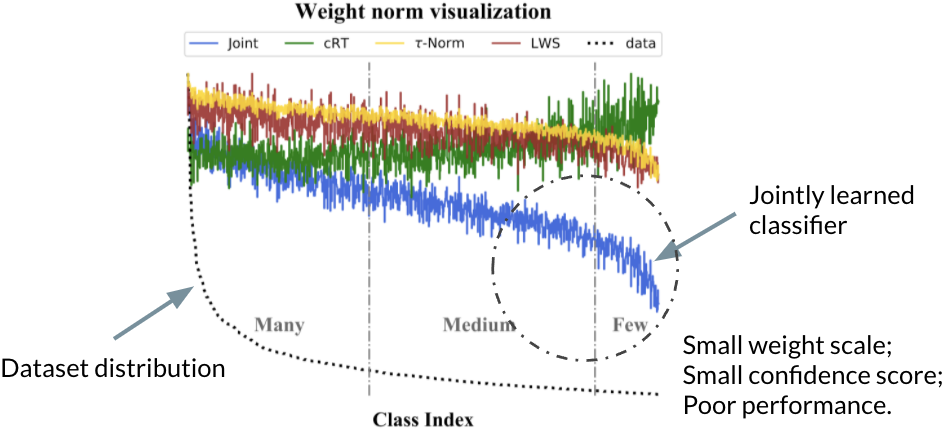

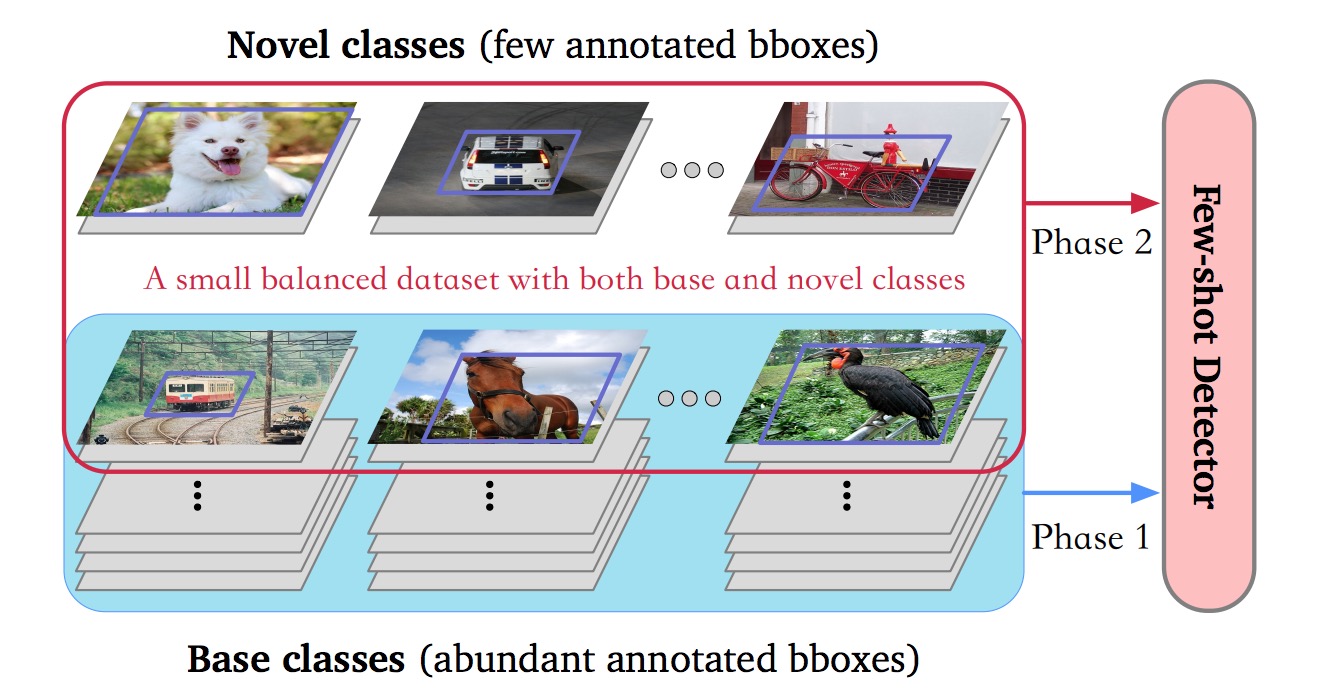

- Dealing with arbitrary data in real life (e.g., long-tailed, unlabeled, or synthetic data).

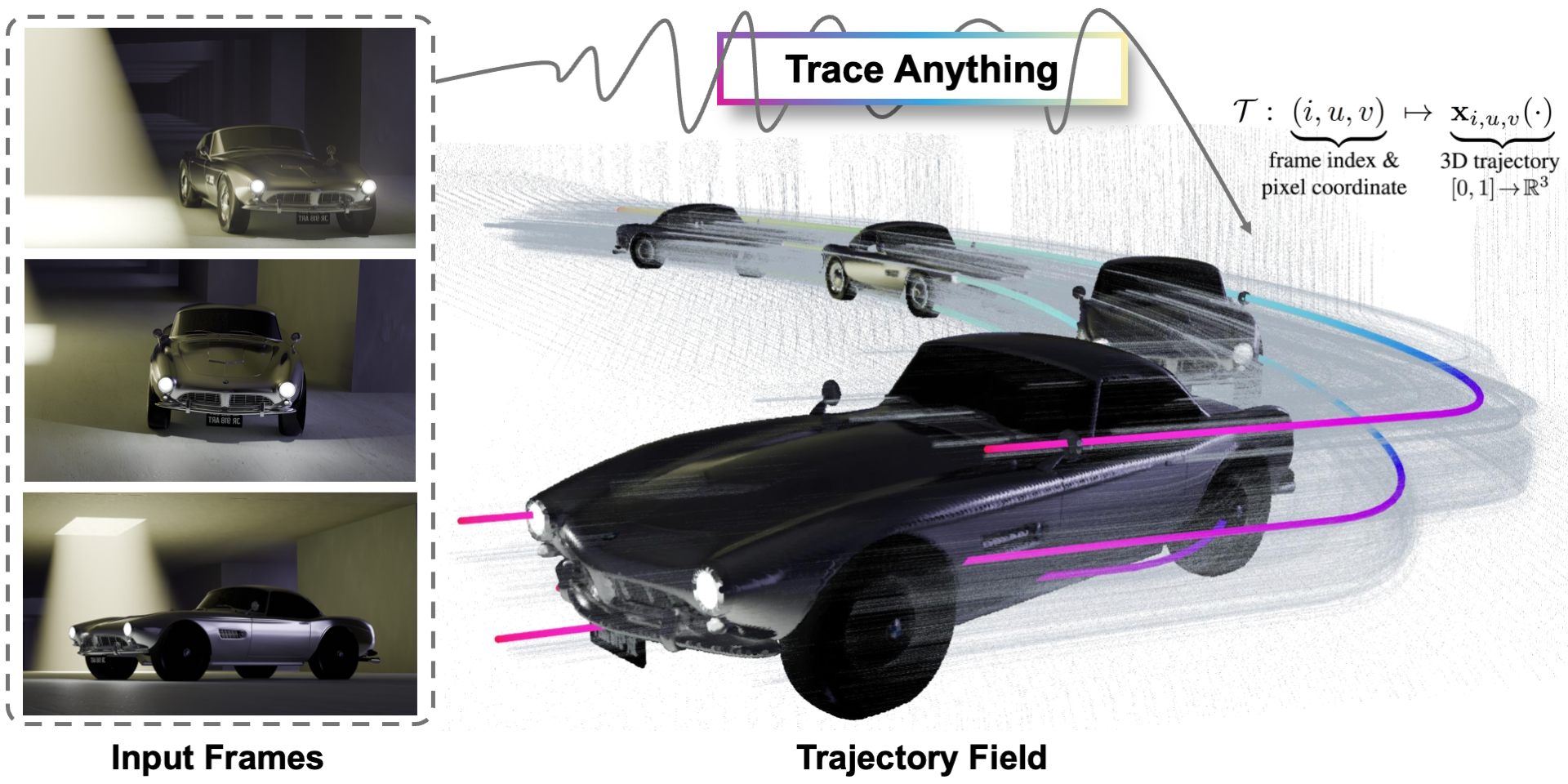

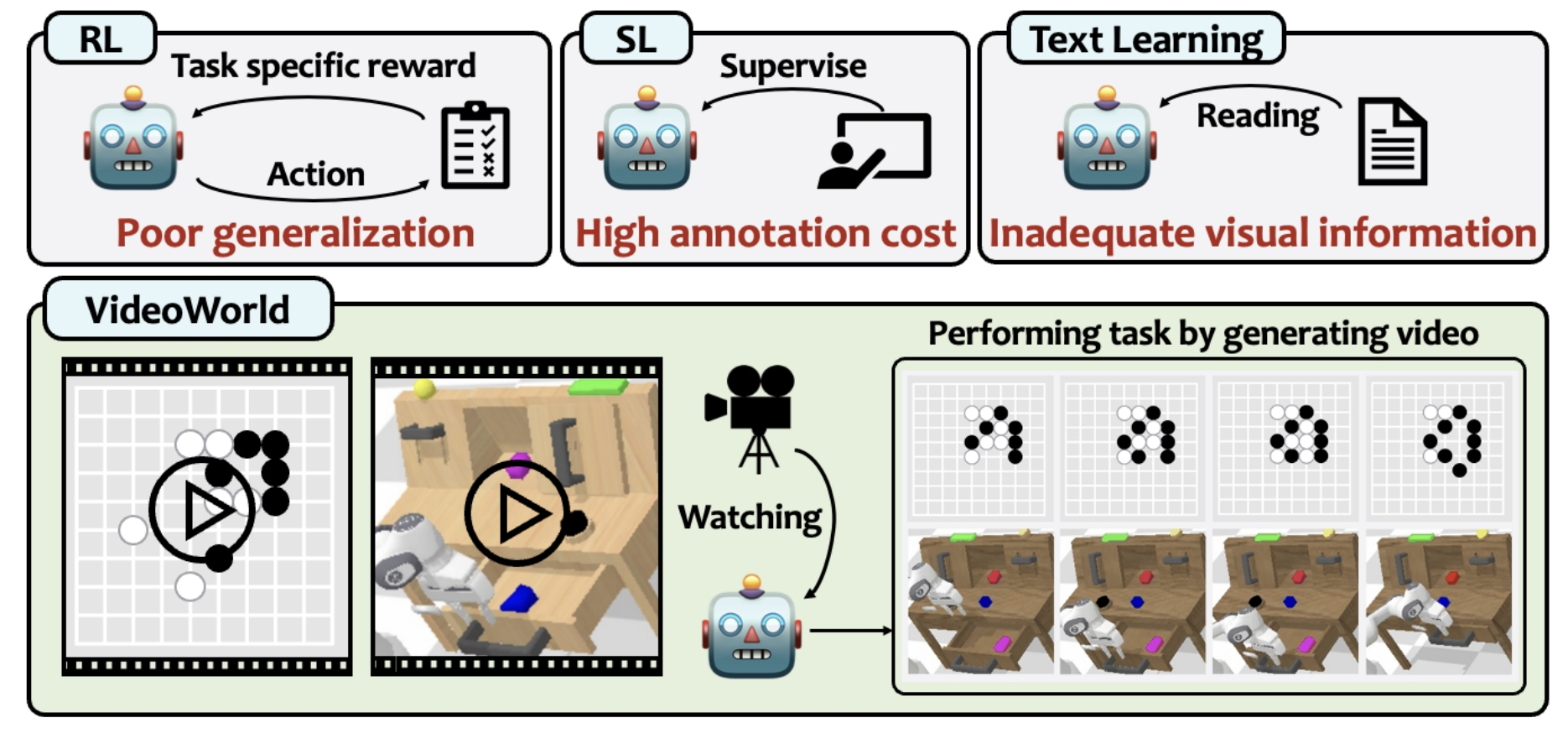

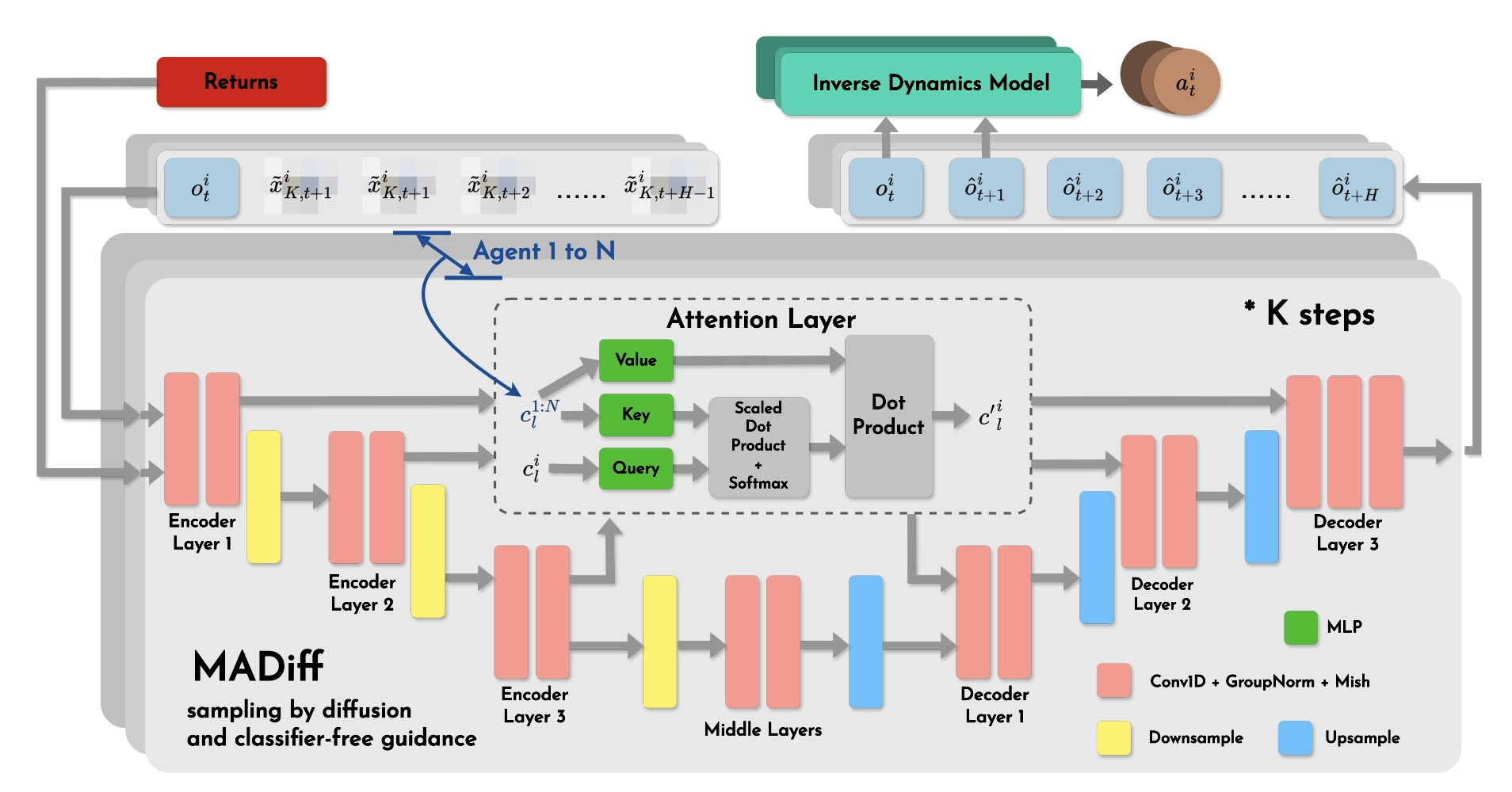

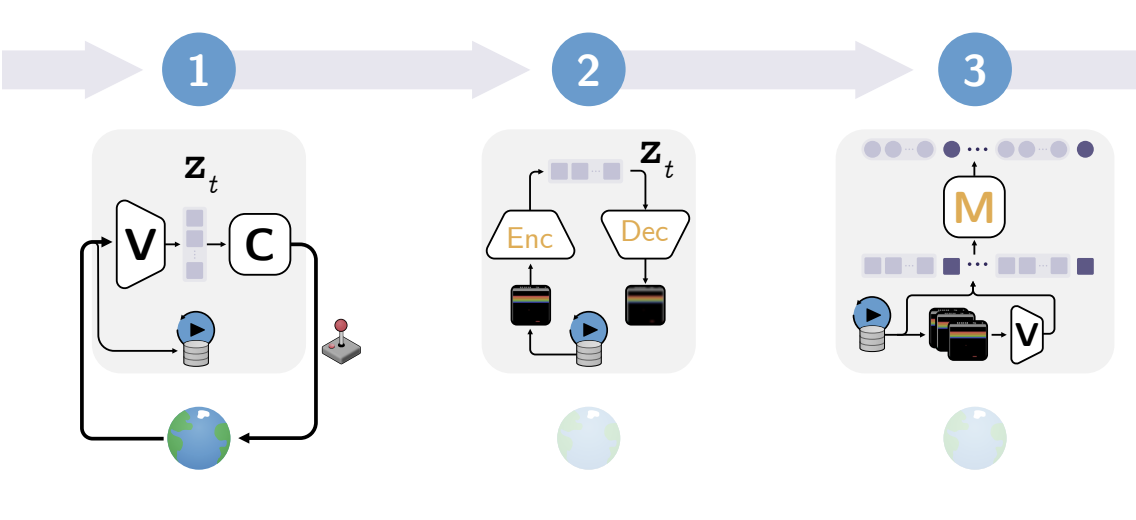

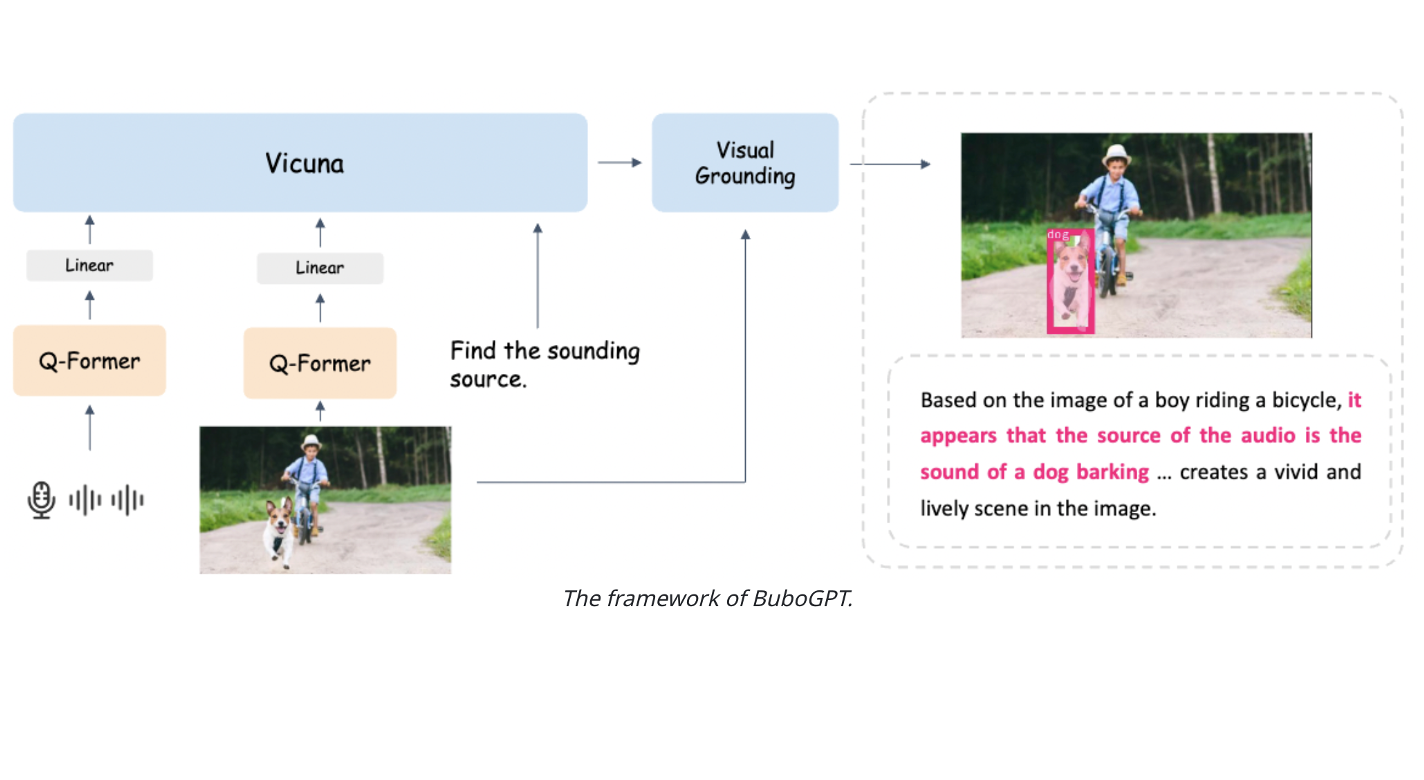

- Recovering (physical and semantic) knowledge of the world from observations.

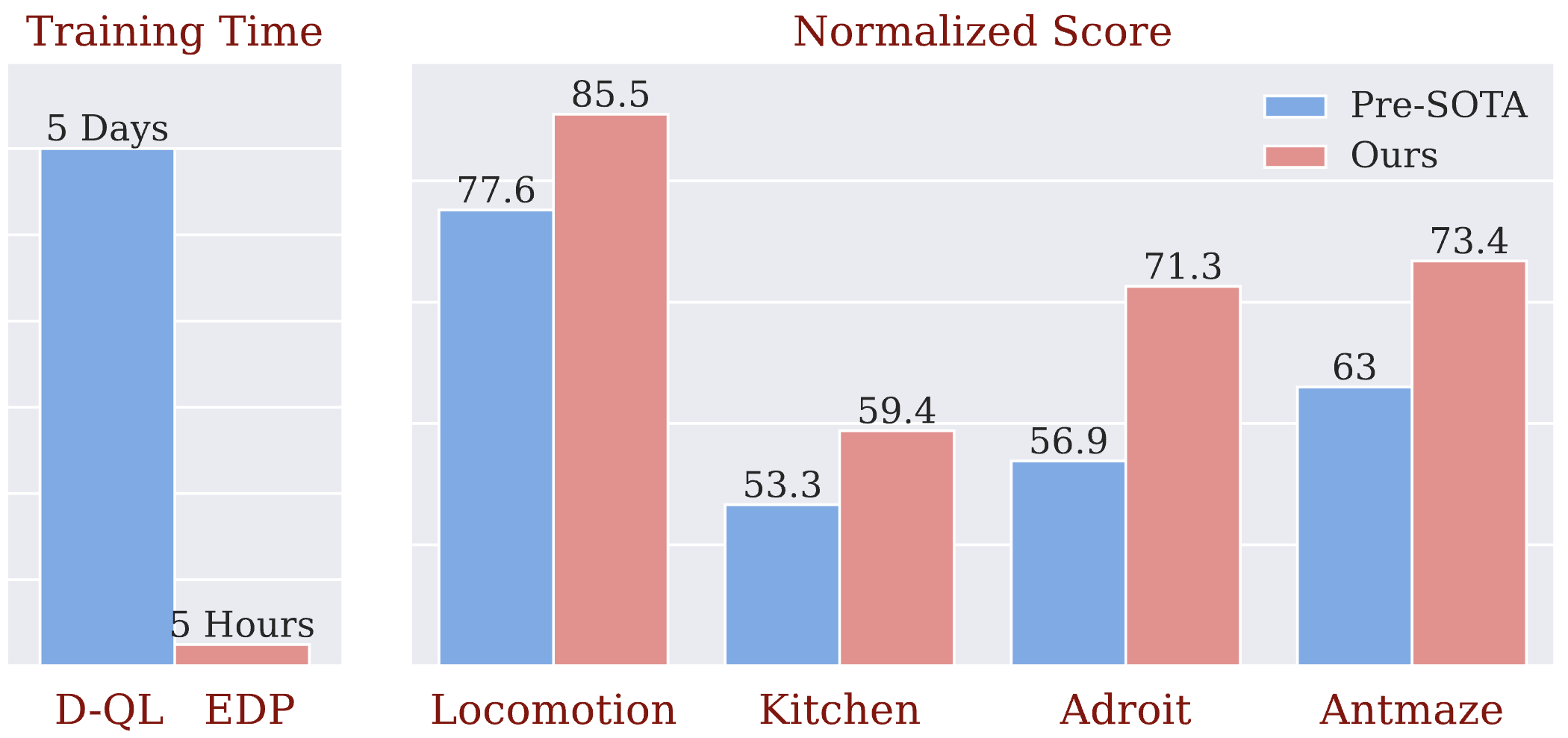

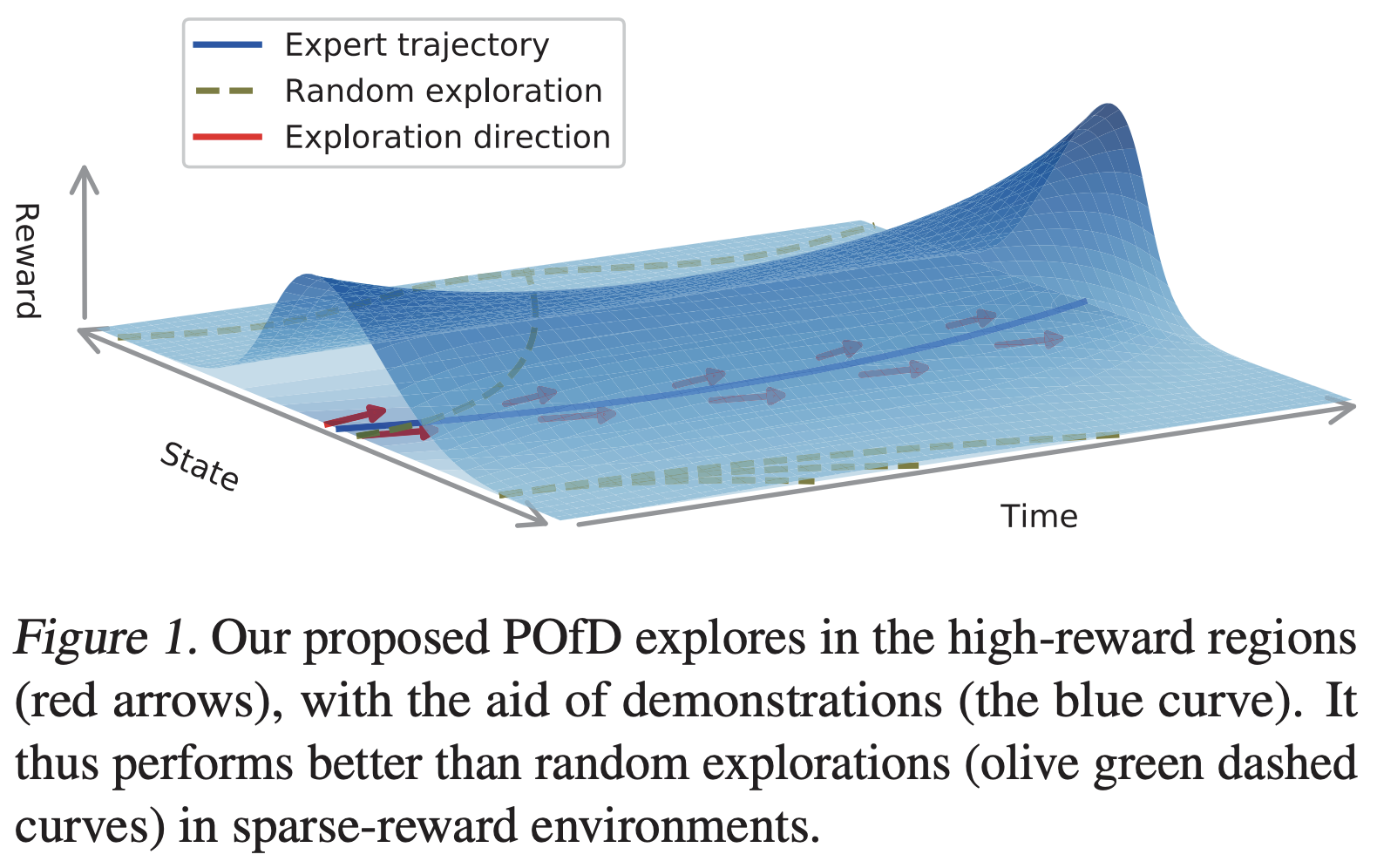

- Effectively and efficiently utilizing the knowledge for interaction.

Previously, I was a research scientist at the Sea AI Lab. I received my PhD from the National University of Singapore, and I was also fortunate to work as a visiting researcher at UC Berkeley under the supervision of Prof. Trevor Darrell. During my PhD studies, I interned at Facebook AI Research, working with Saining Xie, Yannis Kalantidis, and Marcus Rohrbach.

I am leading the development of the Depth Anything series. We have multiple intern and FTE positions (on 3D and 4D foundation models) open for application. Feel free to drop me an email if you are interested.